There was a point early in console Gen8 when I’d had some involvement in over half the published Dynamic Resolution Scaling (DRS) implementations, anything from providing advice to hands-on coding. Subsequently DRS has become a ‘must have’ feature for AAA games, particularly on consoles. There appears to be relatively little technical material written about the technique, at least publicly, so perhaps the following might be of interest.

Without DRS, console games typically have to choose a resolution and level of content such that the GPU ordinarily runs under the frame time budget, with sufficient headroom to avoid dropped frames when all the action kicks off. For example, a 60fps console title might normally run with a GPU time of 14ms, leaving 2.6ms of headroom available for combat effects etc. Adhering to this budget while making good use of the GPU frequently takes a lot of development energy and discipline.

The idea of DRS is to lower resolution to avoid dropping frames, or conversely use spare GPU time to maximise image quality. DRS can also provide a development cost benefit, reducing time spent fine tuning content.

1. Viewports vs Memory Aliasing

Creating different size render targets each frame is a non-starter: the cost of runtime memory management is prohibitive, and an out-of-memory error when allocating a render target at runtime would be extremely difficult to deal with.

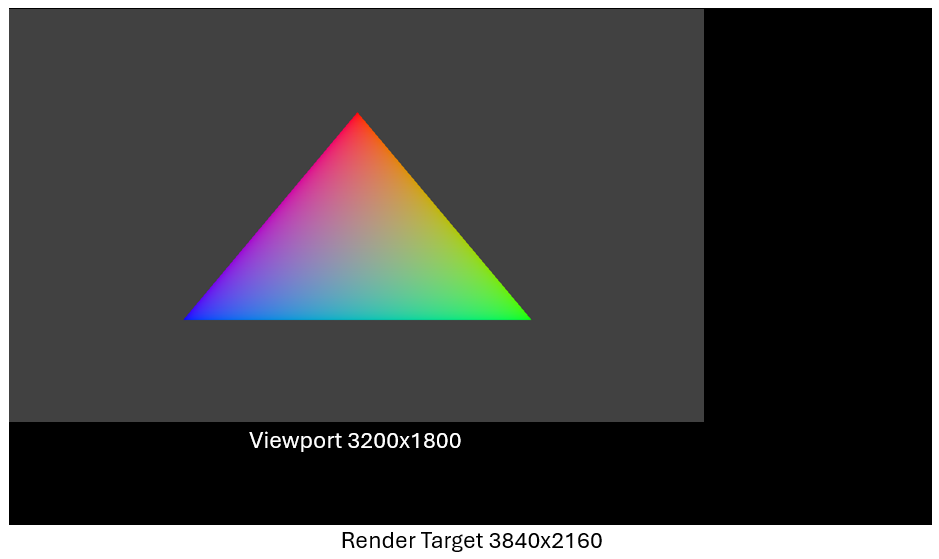

Very early DRS implementations used viewports to render the 3D scene to a limited portion of each render target. (In fact, this was the only way of implementing DRS with DirectX11 on PC.) Issues with this approach include setting the viewport at all relevant points in the pipeline, and modifying the UVs of any shader reading the render target so that it only accesses the portion you rendered to. This is typically invasive, error prone due to border conditions(*), has hidden performance issues(**) and introduces an ongoing maintenance cost. It might also conflict with other uses of viewports, e.g. splitscreen, adding complexity to resolve.

For example, in the above, if you were accessing a pixel with X coordinate of 3199 using UVs, instead of a U of 1.0, you would need a U of 0.833. (Typically you want to address pixel centres making the calculation a little more involved, but you get the idea)
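As a rough sketch of that remap: the function names below are hypothetical, and the 3840-pixel target width with a 3200-pixel viewport is my assumption, inferred from the 0.833 figure rather than taken from any particular engine.

```cpp
#include <cassert>

// Hypothetical helper: U coordinate addressing the centre of pixel `x`
// in a render target that is `targetWidth` pixels wide.
inline float PixelCentreToU(int x, int targetWidth)
{
    assert(targetWidth > 0 && x >= 0 && x < targetWidth);
    return (static_cast<float>(x) + 0.5f) / static_cast<float>(targetWidth);
}

// Hypothetical helper: scale applied to a 0..1 U so that it only spans
// the viewport portion of the (larger) render target.
inline float ViewportUScale(int viewportWidth, int targetWidth)
{
    assert(viewportWidth > 0 && viewportWidth <= targetWidth);
    return static_cast<float>(viewportWidth) / static_cast<float>(targetWidth);
}
```

With the assumed sizes, `ViewportUScale(3200, 3840)` is the 0.833 quoted above, and `PixelCentreToU(3199, 3840)` lands fractionally below it because it addresses the pixel centre rather than its right edge.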

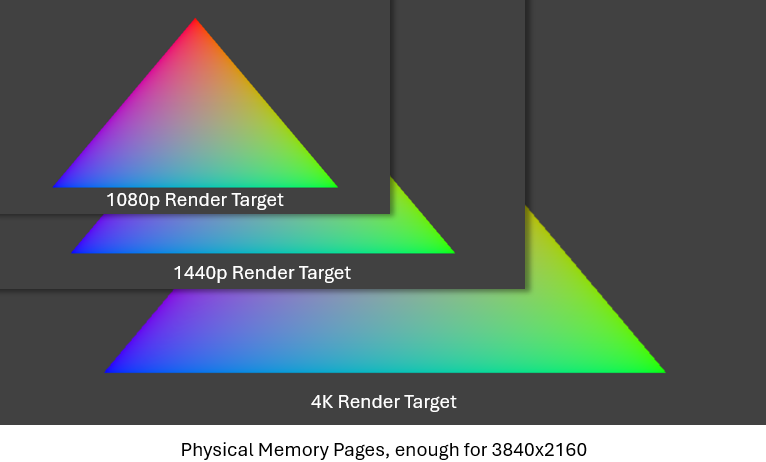

Fortunately, with DirectX12 there is a far better solution leveraging memory aliasing. For each render target, instead create an array of render targets of different sizes that all alias the same physical memory pages. Size that memory for the resolution with the highest memory requirement; counterintuitively this might not be the largest resolution (see Adam Sawicki’s excellent blog), so take the maximum of the memory sizes required by each supported resolution. Selecting which render target to use from this array avoids the use of viewports, without increasing memory requirements.

See ID3D12Device::CreatePlacedResource
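A minimal sketch of the heap sizing step, kept free of D3D12 headers so it stands alone. The `AllocationSizeFn` callback is a stand-in I have introduced for a driver query such as ID3D12Device::GetResourceAllocationInfo; `Extent` and `AliasedHeapSize` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

struct Extent { uint32_t width; uint32_t height; };

// Stand-in for asking the driver how many bytes a render target of the
// given size needs (assumption: wraps GetResourceAllocationInfo or similar).
using AllocationSizeFn = std::function<uint64_t(Extent)>;

// Size of the single heap that every aliased render target is placed in.
// Takes the max over all sizes rather than assuming the largest extent wins.
inline uint64_t AliasedHeapSize(const std::vector<Extent>& sizes,
                                const AllocationSizeFn& allocationSize)
{
    assert(!sizes.empty());
    uint64_t heapBytes = 0;
    for (const Extent& e : sizes)
        heapBytes = std::max(heapBytes, allocationSize(e));
    return heapBytes;
}
```

Each per-resolution render target would then be created as a placed resource at the same offset in a heap of this size.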

* The DirectX specification states that any ‘out of bounds’ access of the render target returns 0, and with render targets aliasing the same memory, you get a consistent out of bounds behaviour no matter what the resolution. With viewports, except at max res, out of bounds access on two edges returns stale data from a previous frame. (It can be surprising how often shaders rely on out of bounds behaviour)

** Clears and lossless render target compression technology are often optimized for a full target clear, rather than a region clear.

2. Which Render Targets?

Typically, the main change to an engine to support DRS is modifying the handling of descriptors for each aliased render target (RTV, SRV, UAV), in such a way that the DRS implementation is hidden from higher level code. That is, a high level render target abstraction object becomes, under the hood, multiple DX12 render targets, one for each resolution.

You can either create an array of descriptors, one for each resolution, or create the descriptors on demand for the next frame’s resolution. In the latter case, be extremely careful not to overwrite a descriptor the GPU is currently using! Descriptors are small and cheap to create, so there really isn’t much to choose between the two options.

It is necessary to flag which render targets should scale with DRS, which don’t (e.g. shadow maps), and which have DRS, but using last frame’s resolution choice. Those a frame behind typically store some form of history, temporal colour data for anti-aliasing perhaps.
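One way this flagging might look, as a sketch only. All of the names here (`DrsMode`, `DrsState`, `BeginFrame`, `ResolveExtent`) are hypothetical and not from any particular engine.

```cpp
#include <cassert>
#include <cstdint>

struct Extent { uint32_t width; uint32_t height; };

// How a render target follows the DRS resolution choice.
enum class DrsMode {
    Fixed,          // never scales, e.g. shadow maps
    CurrentFrame,   // scales with this frame's resolution
    PreviousFrame   // history targets: still sized for last frame
};

struct DrsState {
    Extent current;
    Extent previous;
};

// Call once per frame with the newly chosen resolution.
inline void BeginFrame(DrsState& drs, Extent chosen)
{
    assert(chosen.width > 0 && chosen.height > 0);
    drs.previous = drs.current;
    drs.current = chosen;
}

// Size a render target should use this frame, given its flag.
inline Extent ResolveExtent(DrsMode mode, Extent fixedSize, const DrsState& drs)
{
    switch (mode) {
        case DrsMode::CurrentFrame:  return drs.current;
        case DrsMode::PreviousFrame: return drs.previous;
        case DrsMode::Fixed:         break;
    }
    return fixedSize;
}
```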

3. Frame time and Resolution Choice

DRS normally has a large range of resolutions to pick from; 2-pixel increments can be a good choice.

Some titles choose to scale only in the horizontal axis, which can be an advantage in maintaining resolution on features that might suffer from geometry aliasing in the vertical axis, e.g. stairs. Another idea is to scale horizontally first, then scale vertically. This creates more usable increments in resolution vs scaling in both axes at the same time.
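The "horizontal first, then vertical" idea could be sketched as follows. `BuildLadder` and its parameters are hypothetical, and the default 2-pixel step simply follows the suggestion above.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Extent { uint32_t width; uint32_t height; };

// Builds the list of supported sizes from highest to lowest: width shrinks
// in `step`-pixel increments down to minSize.width, then height shrinks.
inline std::vector<Extent> BuildLadder(Extent maxSize, Extent minSize,
                                       uint32_t step = 2)
{
    assert(step > 0);
    assert(minSize.width > 0 && minSize.height > 0);
    assert(minSize.width <= maxSize.width && minSize.height <= maxSize.height);

    std::vector<Extent> ladder;
    Extent e = maxSize;
    ladder.push_back(e);
    while (e.width - minSize.width >= step) {    // horizontal pass
        e.width -= step;
        ladder.push_back(e);
    }
    while (e.height - minSize.height >= step) {  // then vertical pass
        e.height -= step;
        ladder.push_back(e);
    }
    return ladder;
}
```

Scaling one axis at a time gives one entry per step per axis, rather than a single entry for each combined width and height step.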

Of course, you need to decide what the resolution should be for a given frame time. If the GPU time runs over the target frame time, you need to reduce resolution, and vice versa. You need to drop resolution sufficiently to avoid dropped frames, but ideally without compromising visual quality by dropping further than required. Conversely, when increasing resolution it’s important to do this quickly; being over cautious results in the GPU being under utilised and resolution unnecessarily low. Making sure you don’t either drop resolution too low, or fail to increase resolution quickly enough, requires careful checking with a good debug mode, since the problem is non-obvious, at least compared to a frame drop.

Only marginally exceeding frame time does not immediately result in a dropped frame; instead there is a latency in the presentation pipeline you can eat into before a drop occurs. Advanced implementations of DRS might monitor this latency to implement a ‘panic mode’ when latency is low, dropping resolution more aggressively.

Exactly how changing resolution affects the GPU’s frame time unfortunately ‘depends’. Not all GPU tasks scale linearly with resolution, and many do not scale at all. For example, shadow map rendering, particle update, culling or acceleration structure building normally do not scale. There may also be passes that initially scale down nicely with resolution, e.g. g-buffer rendering, but as resolution diminishes, so vertex processing increasingly becomes the bottleneck. That is, GPU time saving from resolution drop is non-linear, varies with content, and will vary depending on technology choices.

A scientific approach for console is to have several automated test points in the game, render these at each resolution and record the GPU time taken. In this way a table can be built of the expected GPU time delta any resolution change might have. This delta will change during development, as different GPU workloads are added or optimised and content is added to the game.
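A sketch of how such a table might drive the resolution choice. I am assuming the table is indexed from highest resolution (index 0) to lowest, and that the recorded delta between two entries can simply be added to the GPU time just measured; `ChooseResolution` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// tableMs[i] is the GPU time recorded offline for resolution index i
// (assumption: index 0 is the highest resolution).
inline std::size_t ChooseResolution(const std::vector<float>& tableMs,
                                    std::size_t currentIndex,
                                    float measuredMs,
                                    float targetMs)
{
    assert(!tableMs.empty() && currentIndex < tableMs.size());
    for (std::size_t i = 0; i < tableMs.size(); ++i) {
        // Expected time at index i = what we just measured + the table's delta.
        const float predictedMs =
            measuredMs + (tableMs[i] - tableMs[currentIndex]);
        if (predictedMs <= targetMs)
            return i;           // first hit is the highest resolution that fits
    }
    return tableMs.size() - 1;  // nothing fits: fall back to the lowest resolution
}
```

Because the choice jumps straight to the predicted resolution in both directions, it drops far enough in one step and also recovers quickly.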

On PC, due to the varying hardware, the wide array of graphics options that can be changed at any time, and variable power states, this table is perhaps best dynamically updated. The issue here is that a given resolution may not have been chosen for a while, and the scene may have changed significantly since that resolution was last chosen. A solution is to weight the frame time history with a confidence value, depending on how long it is since that resolution was last chosen. The inverse of this confidence value can be used to extrapolate from recently chosen resolutions, or select some known good default.
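One possible reading of the confidence weighting, as a sketch. The linear falloff, the `maxAgeFrames` parameter and the interpretation of "inverse" as `1 - confidence` are all my assumptions; other falloff curves would work equally well.

```cpp
#include <algorithm>
#include <cassert>

// Confidence in a stored frame time: 1 when the resolution was used this
// frame, falling linearly to 0 once it is `maxAgeFrames` old (assumed curve).
inline float Confidence(int framesSinceChosen, int maxAgeFrames)
{
    assert(maxAgeFrames > 0 && framesSinceChosen >= 0);
    const float age = static_cast<float>(framesSinceChosen) /
                      static_cast<float>(maxAgeFrames);
    return 1.0f - std::min(age, 1.0f);
}

// Blend the stored history with a fallback estimate, e.g. one extrapolated
// from recently chosen resolutions or a known good default.
inline float BlendedEstimateMs(float historyMs, float fallbackMs,
                               float confidence)
{
    assert(confidence >= 0.0f && confidence <= 1.0f);
    return confidence * historyMs + (1.0f - confidence) * fallbackMs;
}
```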

Typically, games might aim for a target GPU time slightly under the frame time implied by the target frame rate, e.g. a 16ms target for a 16.6ms frame (60fps). If the game consistently runs 0.6ms under the frame time, it will progressively build the maximum possible latency in the presentation pipeline, until the GPU is throttled waiting for an available swap chain buffer. Advanced DRS implementations which monitor this latency might change the target frame time to be closer to 16.6ms when a large presentation latency exists.
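A sketch of how monitored latency might feed both the ‘panic mode’ and the target adjustment. How presentation latency is measured is platform specific; here I assume it is exposed as a count of frames queued ahead of the display, and the thresholds and names are hypothetical.

```cpp
#include <cassert>

struct DrsTargets {
    float targetMs;  // GPU time the controller aims for
    bool  panic;     // drop resolution more aggressively
};

// queuedFrames: frames of slack currently in the presentation pipeline
// (assumed metric). The defaults follow the 60fps example above.
inline DrsTargets TargetsForLatency(int queuedFrames, int maxQueuedFrames,
                                    float vsyncMs = 16.6f,
                                    float marginMs = 0.6f)
{
    assert(maxQueuedFrames > 0);
    assert(queuedFrames >= 0 && queuedFrames <= maxQueuedFrames);

    DrsTargets t{vsyncMs - marginMs, false};
    if (queuedFrames == 0)
        t.panic = true;        // no slack left: the next overrun drops a frame
    else if (queuedFrames == maxQueuedFrames)
        t.targetMs = vsyncMs;  // pipeline is full: spend the margin on resolution
    return t;
}
```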

It is important to ensure the CPU obtains GPU frame times with the least delay possible.

4. Frame Time Bubbles

A naive implementation for determining frame time is to add a single timestamp query (D3D12_QUERY_TYPE_TIMESTAMP) at the start and end of the frame, the difference being the time the GPU took to render the frame.

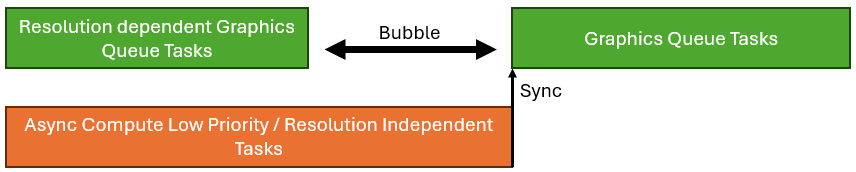

Advanced titles using multiple GPU queues (e.g. graphics and async compute) might want to time only the critical path, using a timestamp query for select ExecuteCommandLists calls, and being careful not to double count any time on different queues. Examples of non-critical tasks that might not be timed include GI update or sky simulation.

It’s possible a title can create a bubble between two command lists while async compute is operating. But this async compute task might not be a critical task, and in fact the title could run at a higher resolution with little or no adverse effect on frame time, by increasing resolution until the bubble collapses. PIX is great for spotting bubbles.
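A sketch of summing the timed intervals without double counting overlap between queues. I am assuming the timestamps from each queue have already been brought onto a common clock, and that `ticksPerSecond` comes from something like ID3D12CommandQueue::GetTimestampFrequency; the struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

struct GpuInterval { uint64_t beginTicks; uint64_t endTicks; };

// Total time covered by the union of the intervals, in milliseconds.
// Time where two queues overlap is counted once.
inline double CriticalPathMs(std::vector<GpuInterval> intervals,
                             uint64_t ticksPerSecond)
{
    assert(ticksPerSecond > 0);
    std::sort(intervals.begin(), intervals.end(),
              [](const GpuInterval& a, const GpuInterval& b) {
                  return a.beginTicks < b.beginTicks;
              });

    uint64_t busyTicks = 0;
    uint64_t coveredUntil = 0;  // end of the time already counted
    for (const GpuInterval& i : intervals) {
        assert(i.endTicks >= i.beginTicks);
        const uint64_t begin = std::max(i.beginTicks, coveredUntil);
        if (i.endTicks > begin) {
            busyTicks += i.endTicks - begin;
            coveredUntil = i.endTicks;
        }
    }
    return static_cast<double>(busyTicks) * 1000.0 /
           static_cast<double>(ticksPerSecond);
}
```

Only intervals for work considered critical would be passed in, so tasks such as GI update or sky simulation never contribute.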

5. Debugging

I’ve found two debug features to be extremely useful in any implementation. The first is a graph over time showing resolution, GPU time and dropped frames. (This is very similar to the frame time graph Digital Foundry use, but with resolution graphed as well; perhaps the graph Digital Foundry wish they had!)

Naturally you want to optimise the GPU to be consistently busy, without dropping frames. Missing a presentation and dropping a frame is bad, but so is unused GPU time. Not dropping resolution fast enough and missing a frame is obvious, however less obvious is dropping resolution too far, or recovering resolution too slowly.

The second debug feature I’ve found useful is a mode which simply changes resolution every frame, increasing then decreasing, or randomly jumping around. This quickly exposes errors in shaders, or perhaps synchronisation issues which are otherwise hard to find, or hard to reproduce from a test report.
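The "increasing then decreasing" variant of this mode might be as simple as the following sketch, where `PingPongIndex` is a hypothetical helper that picks an index into the list of supported resolutions.

```cpp
#include <cassert>
#include <cstddef>

// Resolution index to use on frame `frame` when bouncing between index 0
// and count-1: 0, 1, ..., count-1, count-2, ..., 1, 0, 1, ...
inline std::size_t PingPongIndex(std::size_t frame, std::size_t count)
{
    assert(count > 0);
    if (count == 1)
        return 0;
    const std::size_t period = 2 * (count - 1);
    const std::size_t phase = frame % period;
    return phase < count ? phase : period - phase;
}
```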

A useful trick I found to surface issues more easily, particularly synchronisation issues (e.g. cache flush) is rendering down to extremely low resolutions, far below the floor the title will actually ship with.

6. Camera Movement

In early DRS implementations there was a view that DRS should be allowed to scale down at any time, but only scale up resolution if the camera is moving or rotating. The issue was that changing resolution with a static camera was considered noticeable, particularly at lower resolutions.

Titles often have infrequent GPU tasks, e.g. updating the sky, causing an occasional GPU frame time spike. This could cause a static camera to pin to a lower resolution than the GPU was normally capable of, causing some observers to wonder if a title actually had DRS at all! Resolution would only increase when the camera was on the move.

I discovered that with modern Temporal Anti-Aliasing or Super Resolution, allowing the resolution to scale even with a static camera can in fact improve image quality. These techniques all implement a sub-pixel jitter to accumulate the ground truth over time. Adding a constantly varying resolution with DRS assists this accumulation process on what you might consider a macro scale.

7. Problem Shaders

It’s common that a title implementing DRS might need to fix a few shaders which incorrectly make some assumptions about resolution. Normally, these would be in deferred passes or post processing. It might be that the shader only works correctly for resolutions that are a multiple of 8 pixels (most common resolutions are divisible by 8), or perhaps a multiple of 2 pixels. Constraining render target sizes to be a multiple of 2 pixels is reasonable for DRS, 8 pixels less so.
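Two small helpers that often come up when fixing such shaders, sketched with hypothetical names. The assumption that the problem pass is a compute shader dispatched in 8-pixel-wide thread groups is mine; it is one common source of the multiple-of-8 dependency, not the only one.

```cpp
#include <cassert>
#include <cstdint>

// Largest multiple of `multiple` that is <= value, e.g. to keep DRS sizes even.
inline uint32_t AlignDown(uint32_t value, uint32_t multiple)
{
    assert(multiple > 0);
    return value - (value % multiple);
}

// Thread groups needed to cover `pixels` with groups `groupSize` wide.
// Rounds up, so the shader must bounds-check the partial last group.
inline uint32_t GroupCount(uint32_t pixels, uint32_t groupSize)
{
    assert(groupSize > 0);
    return (pixels + groupSize - 1) / groupSize;
}
```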

8. User Interface

Games don’t apply DRS to the user interface, and render the user interface at a fixed maximum resolution that frequently differs from the 3D render. There are many different solutions which can achieve this. You can use a second display plane on console. Alternatively, upscale the image and either render the UI over the top, or composite a UI render target.

Using a display plane or compositing both have the advantage that you can render the UI at a lower framerate than the 3D scene, e.g. 30Hz UI update for 60Hz gameplay. You also have more choice about when to render the UI in the frame, and therefore perhaps make better use of async compute to do ‘something else’ at the same time as UI rendering. Rendering the UI over the top requires less RAM. As is often the case, there is no right or wrong solution, only trade-offs.

9. CPU load?

One interesting idea is that if the CPU is running over the frame budget but the GPU is not, you let DRS increase resolution and deliberately run over time, to match the time the CPU is taking. If doing this, be careful to filter out any big CPU spikes!

One issue with this trick is that if a future patch improves CPU performance, the title may be criticised for worsening GPU performance, evidenced by a lower resolution, when GPU performance is in fact unchanged. This trick can also cause issues for content optimisers who believe an area is good because the resolution is good. If you are going to implement this GPU time overrun to match CPU time, I recommend it’s in release builds only, and not in development builds used for content building/optimisation, where CPU performance is not yet final.
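A minimal sketch of the idea, with a hard cap on the overrun standing in as a crude spike filter. The cap and all names are my assumptions; a real implementation would more likely smooth the CPU time over several frames as well.

```cpp
#include <algorithm>
#include <cassert>

// GPU target time when allowed to follow a slow CPU. Never goes below the
// normal GPU budget, and never more than `maxOverrunMs` above it, so a
// single big CPU spike cannot drag the target far off.
inline float GpuTargetMs(float gpuBudgetMs, float cpuFrameMs,
                         float maxOverrunMs)
{
    assert(gpuBudgetMs > 0.0f && maxOverrunMs >= 0.0f);
    const float ceilingMs = gpuBudgetMs + maxOverrunMs;
    return std::max(gpuBudgetMs, std::min(cpuFrameMs, ceilingMs));
}
```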

10. The Future

One idea with some adoption is to scale settings as well as resolution, e.g. LOD swap distances, VRS tolerances etc.

A second interesting idea is to apply DRS not only to the internal resolution the title is rendered at, but to the output resolution also. ‘Dual DRS’ perhaps? For example, 1800p is often cited as ‘enough’ resolution that a player cannot notice the difference between 1800p and 4K on a console at living room distance, even with a large TV. (Of course, you can tell with a magnifying glass!) Typically, upscaling happens ‘mid frame’, meaning there are a number of expensive post processing passes that happen after upscaling, particularly any which blur the image. Running these at 1800p instead of 4K means processing only 69% of the pixels, a decent speed up that can be invested instead in increasing the internal resolution, the result of which can be a net higher image quality. However, if the title has even more GPU time spare, it could increase the 1800p output resolution towards 4K as a second DRS range, which perhaps kicks in when internal resolution exceeds, say, 1440p.
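The 69% figure can be checked with a one-line helper, assuming 16:9 frames so that 1800p is 3200x1800 and 4K is 3840x2160. `PixelRatio` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Fraction of the reference frame's pixels covered by a w x h frame.
inline double PixelRatio(uint32_t w, uint32_t h, uint32_t refW, uint32_t refH)
{
    assert(refW > 0 && refH > 0);
    return (static_cast<double>(w) * static_cast<double>(h)) /
           (static_cast<double>(refW) * static_cast<double>(refH));
}
```

`PixelRatio(3200, 1800, 3840, 2160)` is 5,760,000 / 8,294,400, a little over 0.69.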